```
# Let's also make some changes to accommodate the weaker locally hosted LLM
QA_TIMEOUT=120  # Set a longer timeout; running models on CPU can be slow
# Always run search, never skip
DISABLE_LLM_CHOOSE_SEARCH=True
# Don't use the LLM for reranking; the prompts aren't properly tuned for these models
DISABLE_LLM_CHUNK_FILTER=True
# Don't try to rephrase the user query; the prompts aren't properly tuned for these models
DISABLE_LLM_QUERY_REPHRASE=True
# Don't use the LLM to automatically discover time/source filters
DISABLE_LLM_FILTER_EXTRACTION=True
# Uncomment this one if you find that the model is struggling (slow or distracted by too many docs)
# Use only 1 section from the documents and do not require quotes
# QA_PROMPT_OVERRIDE=weak
```
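Before restarting, it can help to confirm which of these settings your env file actually sets, for example that `QA_PROMPT_OVERRIDE` stays unset until you uncomment it. The following is a minimal, hypothetical Python sketch and is not part of the project. It assumes a simple `KEY=VALUE` format where `#` starts a comment. It also assumes that `True` (case-insensitive) means "enabled". The application's own parsing may differ, and the helper names `parse_env` and `as_bool` are made up for illustration.

```python
from __future__ import annotations

import re

# Example content mirroring the settings above
EXAMPLE_ENV = """
# Let's also make some changes to accommodate the weaker locally hosted LLM
QA_TIMEOUT=120  # Set a longer timeout; running models on CPU can be slow
DISABLE_LLM_CHOOSE_SEARCH=True
DISABLE_LLM_CHUNK_FILTER=True
DISABLE_LLM_QUERY_REPHRASE=True
DISABLE_LLM_FILTER_EXTRACTION=True
# QA_PROMPT_OVERRIDE=weak
"""


def parse_env(text: str) -> dict[str, str]:
    """Hypothetical helper: collect KEY=VALUE pairs from env-file text.

    Simplified sketch: skips blank lines and full-line comments, strips an
    inline comment introduced by whitespace followed by '#', and does not
    handle quoting or escapes.
    """
    settings: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # commented-out settings (e.g. QA_PROMPT_OVERRIDE) stay unset
        line = re.split(r"\s+#", line, maxsplit=1)[0]
        key, sep, value = line.partition("=")
        if sep:
            settings[key.strip()] = value.strip()
    return settings


def as_bool(value: str | None) -> bool:
    """Hypothetical convention: only 'true' (any case) counts as enabled."""
    return (value or "").strip().lower() == "true"


if __name__ == "__main__":
    settings = parse_env(EXAMPLE_ENV)
    for key, value in settings.items():
        print(f"{key} = {value}")
    print("QA_PROMPT_OVERRIDE set:", "QA_PROMPT_OVERRIDE" in settings)
```

To check your real configuration, pass it the contents of your env file, e.g. `parse_env(open(path).read())`, where `path` is wherever your deployment keeps that file.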

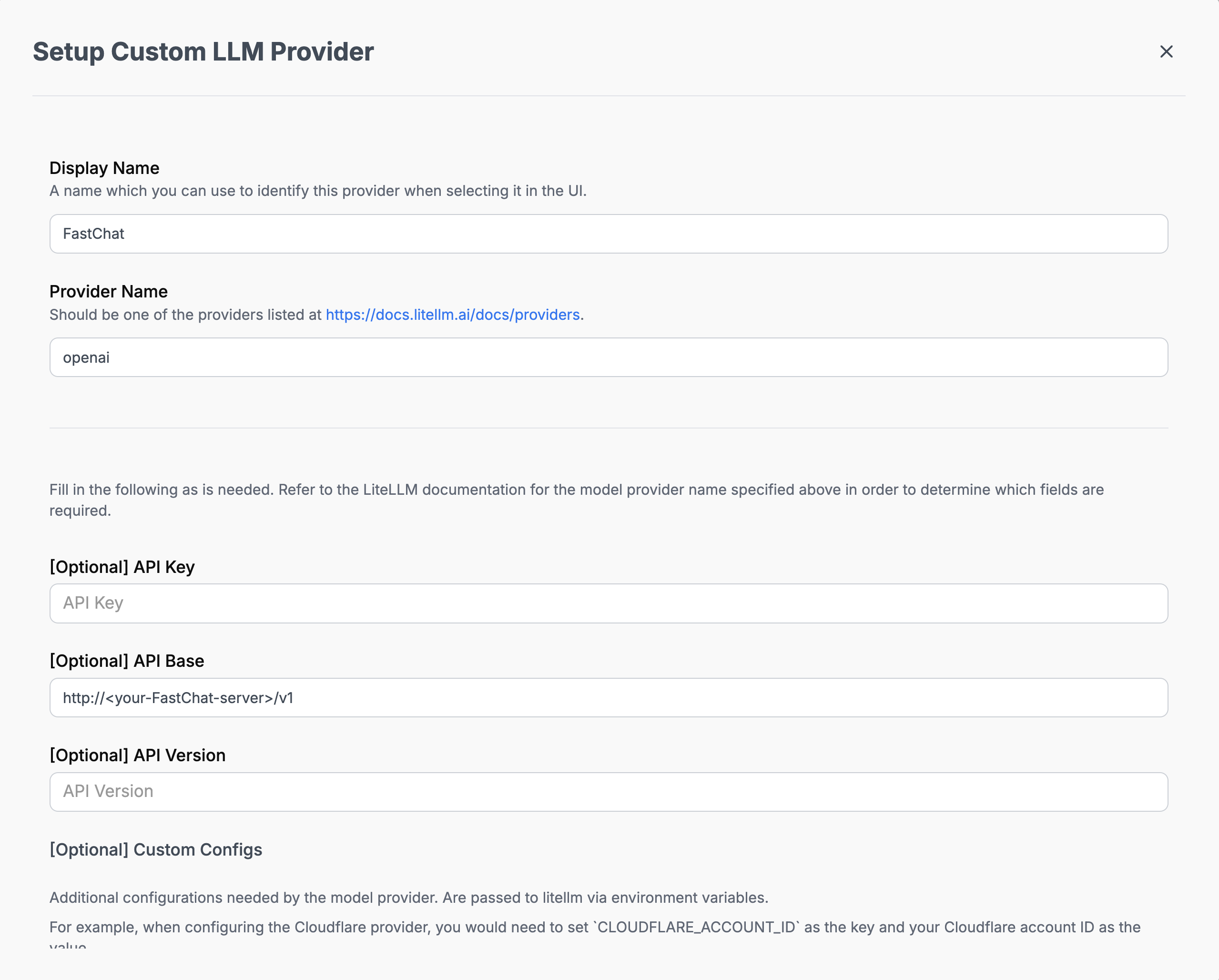

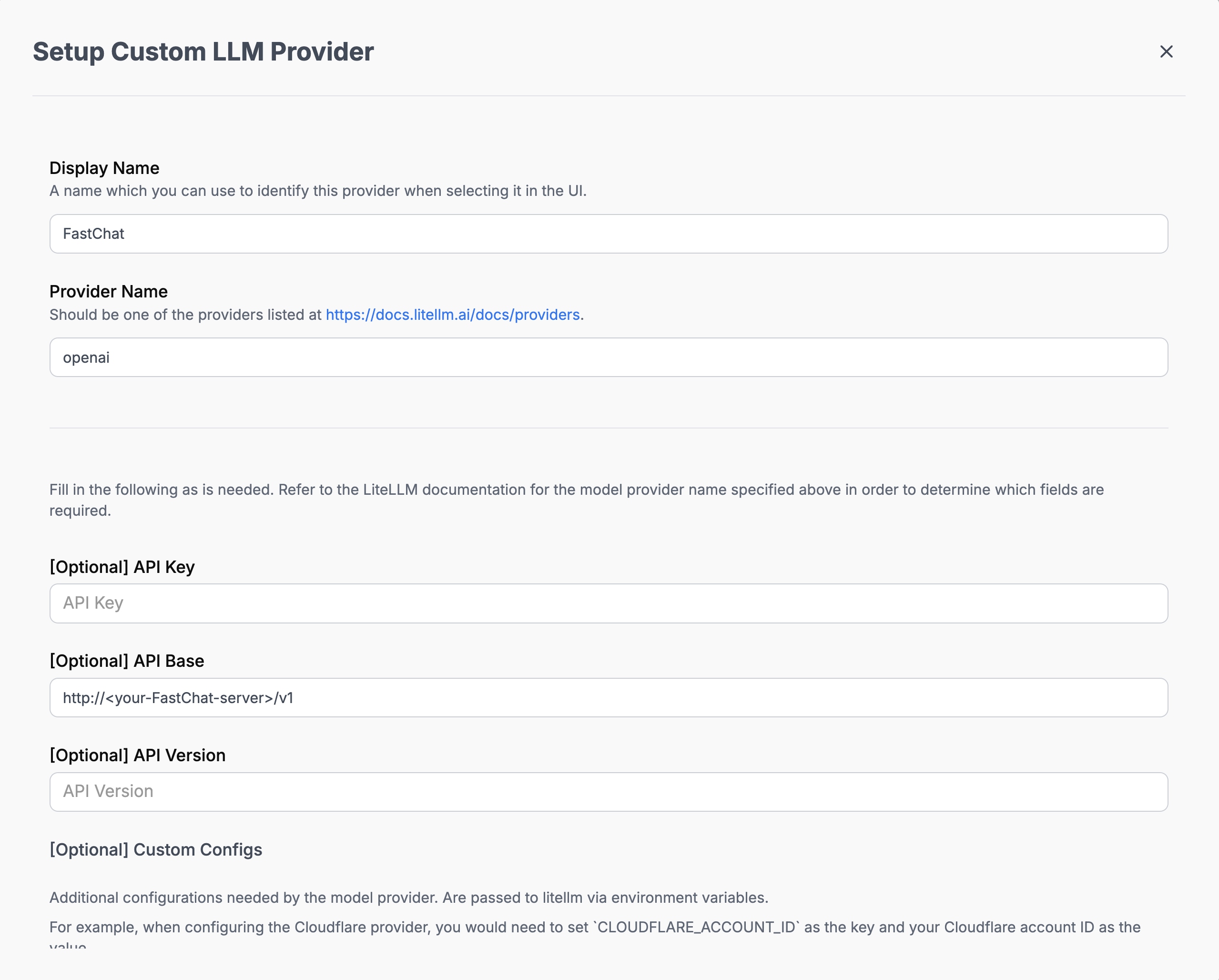

You may also want to adjust some of these environment variables depending on your model choice and how you're running FastChat (e.g. on CPU vs. GPU).